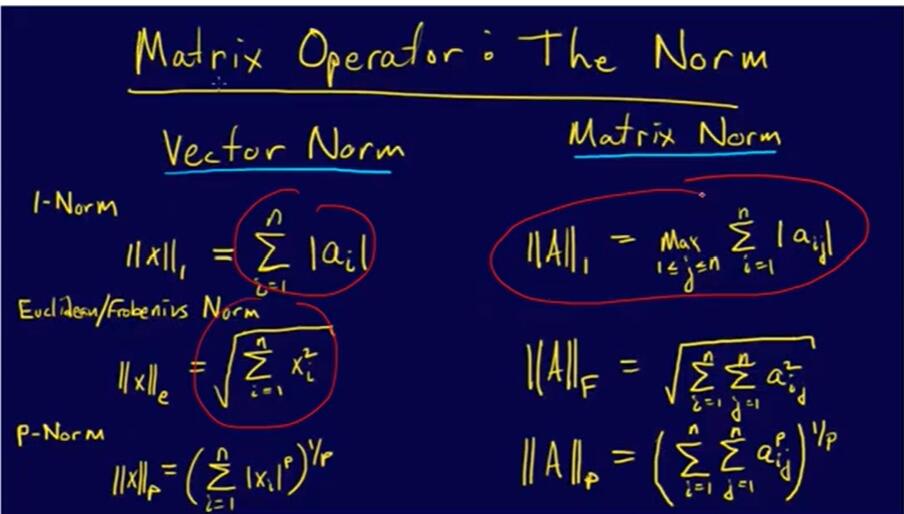

norm: computing norms

Not the same thing as normalization (e.g. batch_norm).

Vector norms and matrix norms are computed differently.

I didn't fully follow the computation; I'll come back to it when I actually need to compute one.
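A minimal sketch of the vector-vs-matrix difference, assuming PyTorch 1.10 or later (where `torch.linalg.vector_norm` and `torch.linalg.matrix_norm` exist). The 2x2 tensor `m` is an arbitrary example. A vector norm treats all elements as one flat vector, while the matrix 1-norm is the largest column sum of absolute values, so the two "1-norms" of the same tensor differ:

```python
import math
import torch

m = torch.tensor([[1., -2.],
                  [3., 4.]])

# Vector norms: m is treated as the flat vector [1, -2, 3, 4]
v1 = torch.linalg.vector_norm(m, ord=1)           # |1| + |-2| + |3| + |4| = 10
v2 = torch.linalg.vector_norm(m, ord=2)           # sqrt(1 + 4 + 9 + 16) = sqrt(30)

# Matrix norms: m is treated as a 2x2 matrix
m1 = torch.linalg.matrix_norm(m, ord=1)           # max column abs sum: max(1+3, 2+4) = 6
minf = torch.linalg.matrix_norm(m, ord=math.inf)  # max row abs sum: max(1+2, 3+4) = 7
mfro = torch.linalg.matrix_norm(m)                # Frobenius (the default), equals v2
m2 = torch.linalg.matrix_norm(m, ord=2)           # spectral norm: largest singular value

# The older Tensor.norm: without dim, it flattens like a vector norm
old1 = m.norm(1)                                  # 10
# With dim, the norm is taken along that dimension only (here: one value per row)
rows = m.norm(1, dim=1)                           # [|1|+|-2|, |3|+|4|] = [3, 7]

print(v1, v2, m1, minf, mfro, m2)
print(old1, rows)
```

Note that the vector 2-norm of the flattened tensor and the matrix Frobenius norm coincide; the two families only diverge for the other orders.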

min, max, prod, mean, etc.

prod() multiplies all the elements together (the product).

mean() returns the average.

min() returns the minimum.
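A short sketch of these reductions on a small tensor; with no dim given, each one reduces over all elements. The tensor `a` is an illustrative choice. `mean()` needs a floating-point tensor, hence the `.float()`:

```python
import torch

a = torch.arange(1, 9).view(2, 4).float()   # [[1, 2, 3, 4], [5, 6, 7, 8]]

print(a.min().item())    # 1.0
print(a.max().item())    # 8.0
print(a.mean().item())   # 4.5
print(a.prod().item())   # 40320.0 (= 1*2*...*8)
print(a.sum().item())    # 36.0
```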

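argmin, argmax: return the index of the min/max instead of the value.

If no dimension is specified, the tensor is flattened first, so the returned index refers to the flattened tensor.

argmin(dim=): finds the minimum along dimension dim and returns its index.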
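A sketch contrasting the flattened and per-dimension behavior, using an arbitrary 2x3 tensor. The `divmod` step, which converts a flat index back to (row, col), assumes a contiguous row-major 2-D tensor:

```python
import torch

a = torch.tensor([[1., 5., 2.],
                  [7., 0., 3.]])

# No dim: the index is into the flattened tensor [1, 5, 2, 7, 0, 3]
flat_max = a.argmax()          # 3 (the value 7)
flat_min = a.argmin()          # 4 (the value 0)

# Convert the flat index back to (row, col)
row, col = divmod(flat_max.item(), a.shape[1])   # (1, 0)

# With dim: one index per slice along that dimension
per_row_max = a.argmax(dim=1)  # [1, 0]: column of the max in each row
per_col_min = a.argmin(dim=0)  # [0, 1, 0]: row of the min in each column

print(flat_max, flat_min, (row, col), per_row_max, per_col_min)
```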

dim, keepdim: reducing along dim normally removes that dimension; keepdim=True keeps it with size 1.

max(dim=): returns both the max values and their indices.

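```python
import torch

a = torch.randn(4, 10)   # random 4x10 tensor; values differ on every run
print(a)

a.argmax(dim=1)          # index of the max in each row (same as c below)

b, c = a.max(dim=1)      # max along dim 1: b = max values, c = their indices
print(b, c)
```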

Output of one run:

```
tensor([[-1.0555e+00, -4.7406e-01, -1.7884e+00,  4.7256e-01,  1.2359e-01,
         -1.1320e-02,  8.9937e-01, -3.7503e-01,  4.4091e-01, -1.0220e+00],
        [-4.5359e-01,  2.1482e-01, -1.1953e+00,  9.0963e-03, -7.7804e-01,
          8.5170e-01, -9.5218e-01,  7.8373e-01, -5.0498e-01,  2.7409e-01],
        [ 1.0214e+00,  1.0886e+00, -1.1839e+00,  2.0071e+00,  7.4919e-01,
          3.9205e-01, -5.6472e-01, -1.3950e+00, -1.6764e+00,  2.4113e+00],
        [ 8.6189e-01,  1.6097e-03, -2.1737e-01,  9.0528e-01, -1.4958e+00,
         -1.1681e-01,  7.7766e-01,  1.0796e+00,  4.0260e-02, -1.3723e+00]])
tensor([0.8994, 0.8517, 2.4113, 1.0796]) tensor([6, 5, 9, 7])
```
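In the example above, `b` and `c` both have shape `[4]`, because `max(dim=1)` removes dimension 1. A minimal sketch of what `keepdim=True` changes, using a fresh random tensor of the same shape (so the values will differ from the run above):

```python
import torch

a = torch.randn(4, 10)

# Default: the reduced dimension disappears
b, c = a.max(dim=1)
print(b.shape, c.shape)                      # torch.Size([4]) torch.Size([4])

# keepdim=True: the reduced dimension stays, with size 1
out = a.max(dim=1, keepdim=True)             # named tuple with .values and .indices
print(out.values.shape, out.indices.shape)   # torch.Size([4, 1]) torch.Size([4, 1])

# The kept dimension lets the result broadcast against a, e.g. subtract each row's max
shifted = a - out.values                     # shape [4, 10]; each row's max becomes 0
print(shifted.max(dim=1).values)             # all zeros
```

The same `keepdim` flag works on the other reductions in these notes (`min`, `argmax`, `argmin`, `mean`, `sum`, `prod`, `norm`).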