1 Embeds common layers

- Linear (fully connected layer)

- ReLU, Sigmoid, etc.

- Conv2d

- ConvTranspose2d

- Dropout

- etc.
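As a rough illustration of the layers listed above, the sketch below chains a few of them on a dummy input. The batch size, the 28x28 image size, and the channel counts are assumptions chosen only to make the shapes easy to follow.

```python
import torch
import torch.nn as nn

# Hypothetical input: a batch of 4 single-channel 28x28 images.
x = torch.randn(4, 1, 28, 28)

conv = nn.Conv2d(1, 8, kernel_size=3, stride=1, padding=1)
deconv = nn.ConvTranspose2d(8, 1, kernel_size=3, stride=1, padding=1)
relu = nn.ReLU()
dropout = nn.Dropout(p=0.5)
fc = nn.Linear(28 * 28, 10)  # fully connected layer

h = relu(conv(x))                    # shape (4, 8, 28, 28)
r = deconv(h)                        # shape (4, 1, 28, 28)
logits = fc(dropout(r.flatten(1)))   # shape (4, 10)

print(h.shape, r.shape, logits.shape)
```

Each layer is itself an nn.Module, so it can be called like a function and nested inside other modules.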

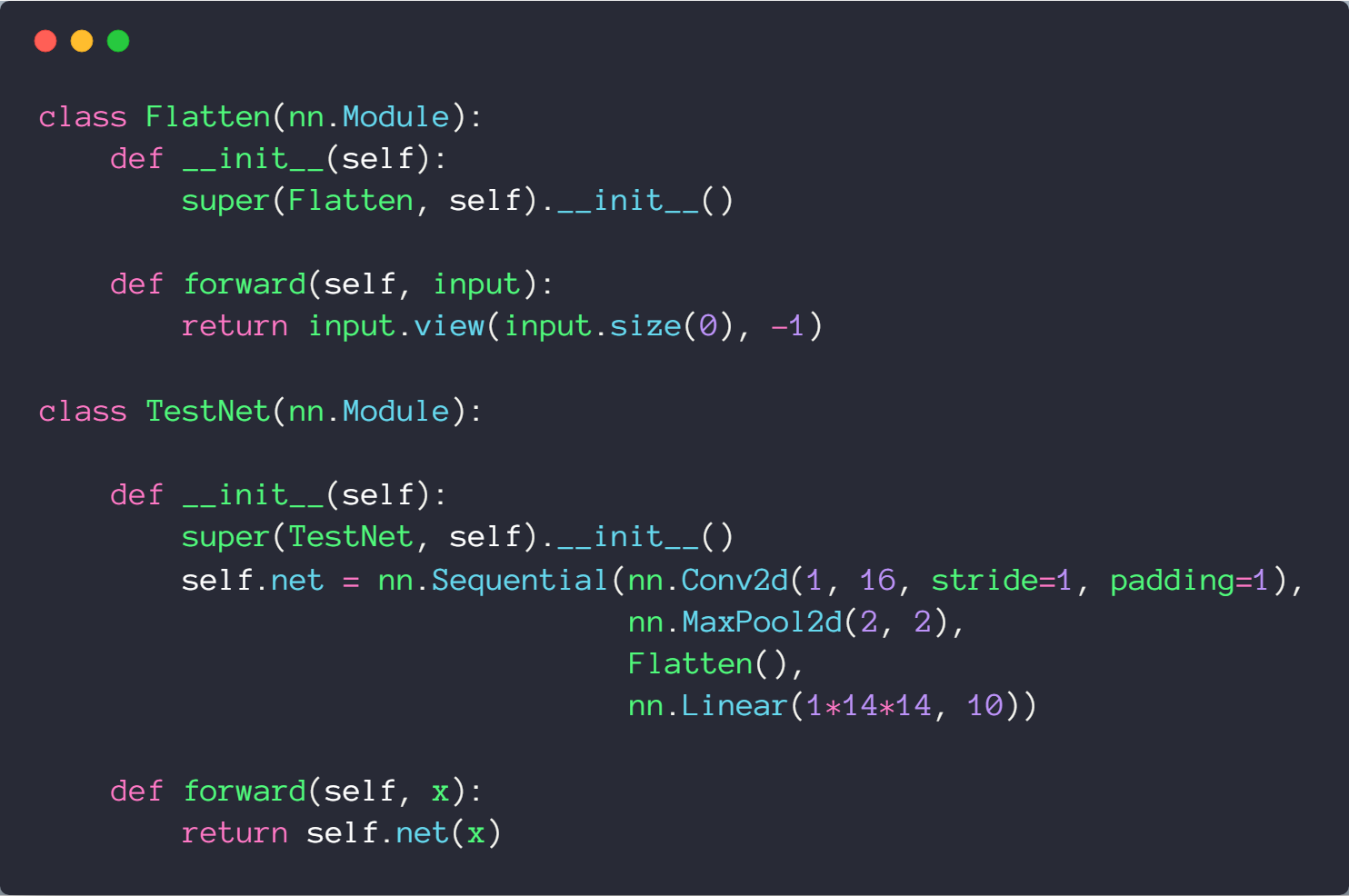

2 Container

Calling net(x) passes x through every layer of the container, in order:

    self.net = nn.Sequential(
        nn.Conv2d(1, 32, 5, 1, 1),
        nn.MaxPool2d(2, 2),
        nn.ReLU(True),
        nn.BatchNorm2d(32),
        # ...
    )
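A runnable version of the container might look like the sketch below. The first four layers come from the notes; the trailing Flatten and Linear layers, the class name Net, and the 28x28 input size are assumptions added only so the example can be executed end to end.

```python
import torch
import torch.nn as nn

class Net(nn.Module):
    def __init__(self):
        super().__init__()
        self.net = nn.Sequential(
            nn.Conv2d(1, 32, 5, 1, 1),   # 28x28 -> 26x26
            nn.MaxPool2d(2, 2),          # 26x26 -> 13x13
            nn.ReLU(True),
            nn.BatchNorm2d(32),
            # Assumed tail, not from the notes:
            nn.Flatten(),
            nn.Linear(32 * 13 * 13, 10),
        )

    def forward(self, x):
        # One call runs every layer in the container in order.
        return self.net(x)

net = Net()
x = torch.randn(4, 1, 28, 28)  # hypothetical input batch
out = net(x)
print(out.shape)
```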

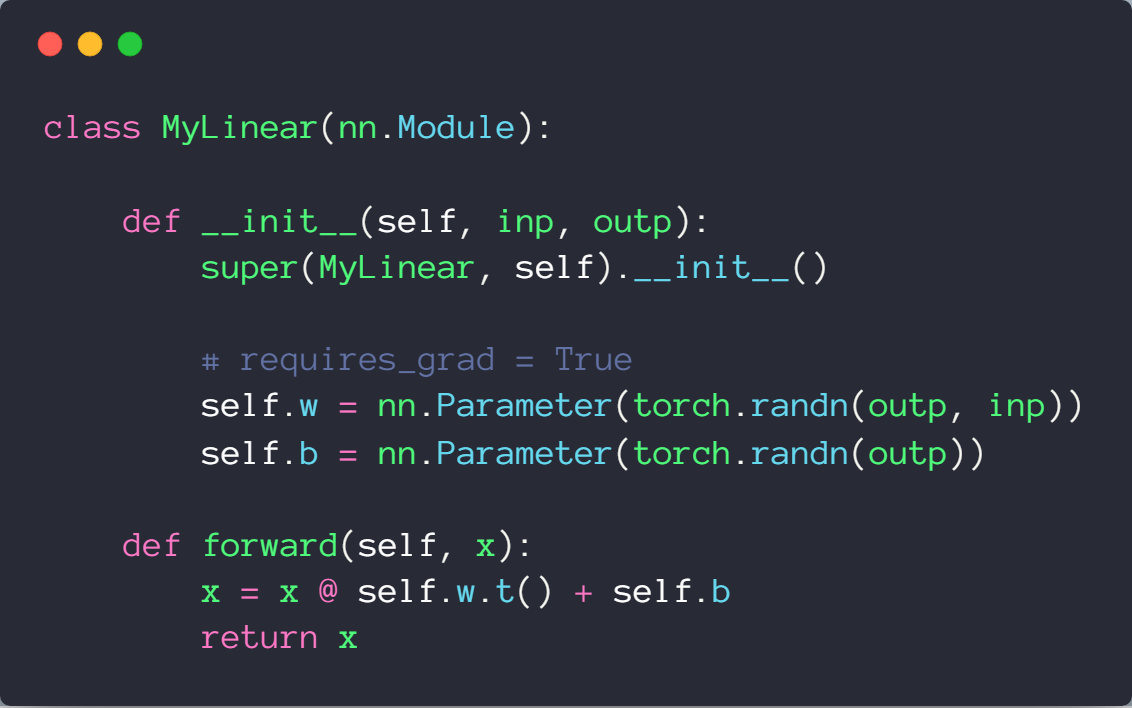

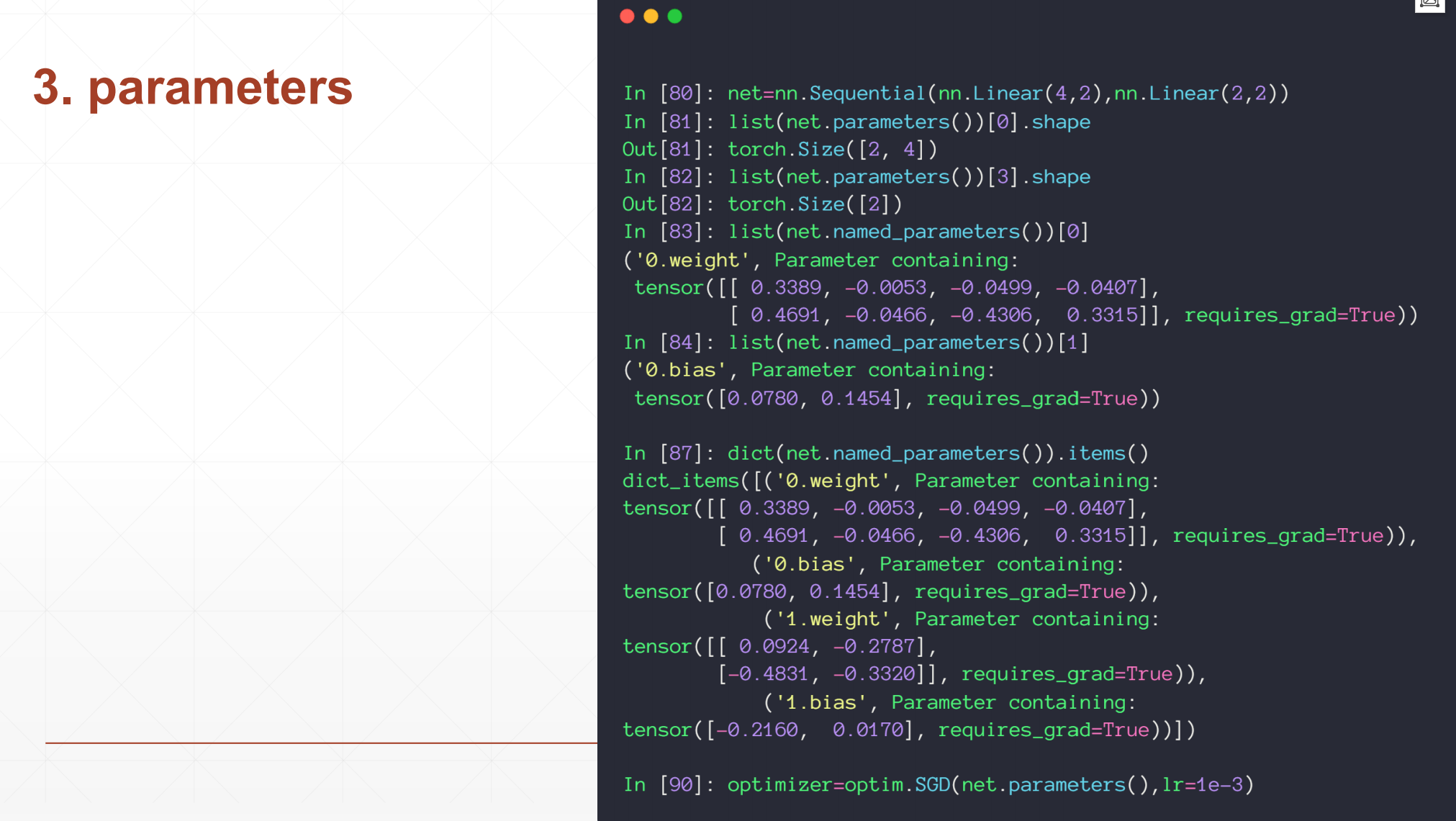

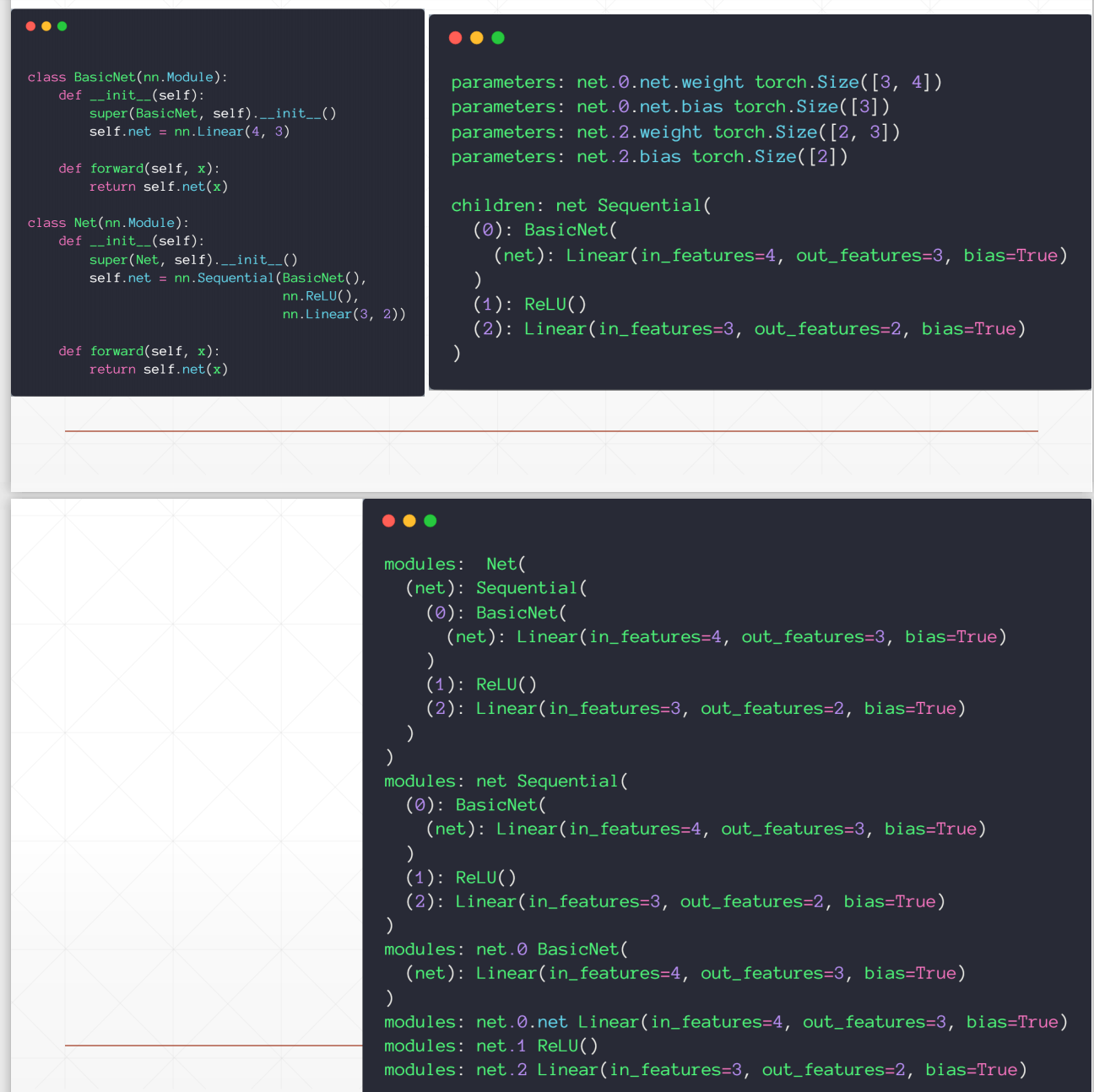

3 Parameters
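A minimal sketch of how a module exposes its parameters, assuming a small hypothetical two-layer network. named_parameters() yields (name, tensor) pairs and parameters() yields the tensors alone.

```python
import torch.nn as nn

# Hypothetical network used only for illustration.
net = nn.Sequential(nn.Linear(4, 3), nn.ReLU(), nn.Linear(3, 2))

names = []
for name, p in net.named_parameters():
    names.append(name)
    print(name, tuple(p.shape), p.requires_grad)

# Total number of scalar parameters: 4*3 + 3 + 3*2 + 2.
n_params = sum(p.numel() for p in net.parameters())
print(n_params)
```

The iterator returned by net.parameters() is what is usually handed to an optimizer, for example torch.optim.SGD(net.parameters(), lr=0.1).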

4 modules

modules: all nodes (the module itself and every descendant)

children: direct child nodes only
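The difference can be checked on a small nested container. The network below is a hypothetical example, not taken from the notes.

```python
import torch.nn as nn

# Hypothetical nested network: a Sequential inside a Sequential.
net = nn.Sequential(
    nn.Sequential(nn.Linear(4, 3), nn.ReLU()),
    nn.Linear(3, 2),
)

# children(): only the two direct children.
children = list(net.children())

# modules(): net itself, the inner Sequential, Linear, ReLU, Linear.
modules = list(net.modules())

print(len(children), len(modules))
```

Note that modules() includes the root module itself as its first element, while children() never does.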

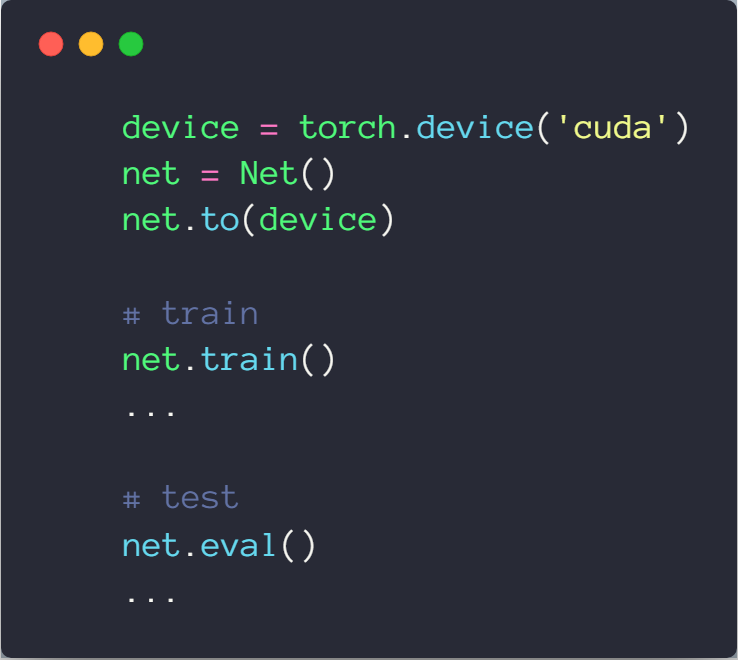

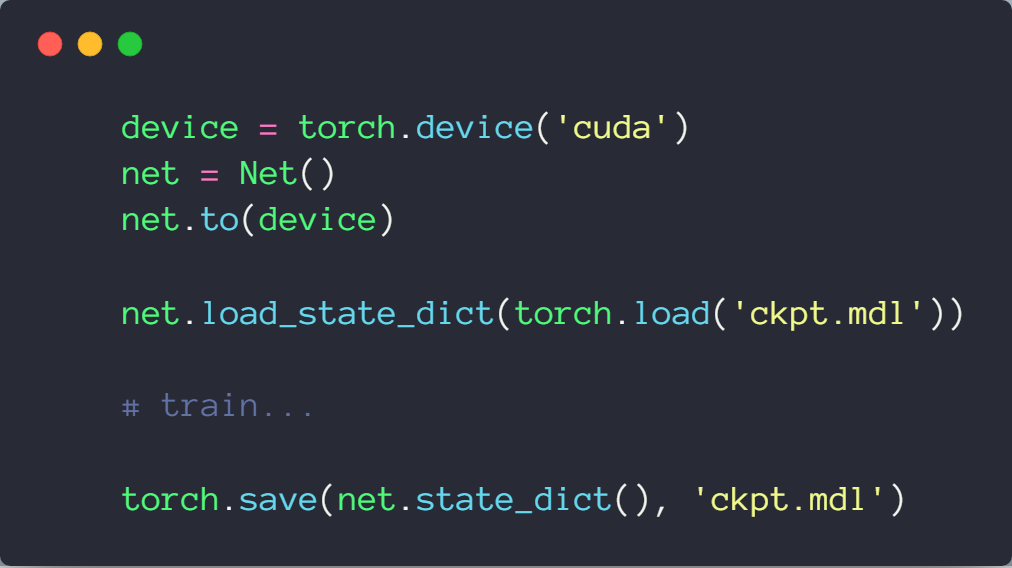

5 to(device)

    device = torch.device('cuda')
    net = Net()
    net.to(device)  # moves the module in place and returns the same object; tensor.to(device) returns a new tensor instead, so the two behave differently
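The difference between the two to() calls can be sketched as follows. Two assumptions are made so the example runs on any machine: the device falls back to the CPU when CUDA is unavailable, and a dtype conversion stands in for a device move when showing that Tensor.to returns a new tensor.

```python
import torch
import torch.nn as nn

# Fall back to CPU so the sketch runs without a GPU (assumption).
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

net = nn.Linear(4, 2)
same = net.to(device)          # parameters are moved in place
module_is_same = same is net   # the module object itself is returned

t = torch.zeros(3)
t64 = t.to(torch.float64)      # a new tensor is returned
tensor_is_same = t64 is t      # the original tensor is left unchanged

# Tensors therefore need to be rebound to the result of .to().
x = torch.randn(1, 4).to(device)
out = net(x)
print(module_is_same, tensor_is_same, t.dtype, out.device)
```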

6 save and load
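One common way to save and load a model is through its state_dict, sketched below. The checkpoint file name and the temporary directory are hypothetical, and a single Linear layer stands in for a real network.

```python
import os
import tempfile

import torch
import torch.nn as nn

net = nn.Linear(4, 2)

# Hypothetical checkpoint location inside a temporary directory.
path = os.path.join(tempfile.mkdtemp(), 'ckpt.pt')

# Save only the parameters and buffers, not the whole module object.
torch.save(net.state_dict(), path)

# Load into a freshly constructed module with the same architecture.
restored = nn.Linear(4, 2)
restored.load_state_dict(torch.load(path))

weights_match = torch.equal(net.weight, restored.weight)
print(weights_match)
```

Saving the state_dict rather than the module keeps the checkpoint independent of the class definition's import path.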

7 Switching between train and test modes
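A minimal sketch of the mode switch, using a hypothetical network with a Dropout layer, since Dropout (like BatchNorm) behaves differently in the two modes.

```python
import torch
import torch.nn as nn

# Hypothetical network; Dropout is active only in train mode.
net = nn.Sequential(nn.Linear(4, 4), nn.Dropout(p=0.5))
x = torch.ones(2, 4)

net.train()                 # training mode: dropout randomly zeroes activations
train_flag = net.training

net.eval()                  # evaluation mode: dropout becomes a no-op
eval_flag = net.training

with torch.no_grad():       # gradients are not needed at test time
    a = net(x)
    b = net(x)

# In eval mode two forward passes on the same input agree.
deterministic = torch.equal(a, b)
print(train_flag, eval_flag, deterministic)
```

train() and eval() set the training flag recursively on every submodule, so one call on the root module is enough.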